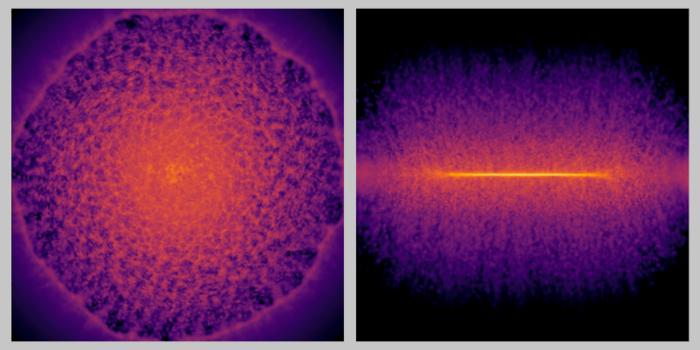

Researchers led by Keiya Hirashima at the RIKEN Center for Interdisciplinary Theoretical and Mathematical Sciences (iTHEMS) in Japan, with colleagues from The University of Tokyo and Universitat de Barcelona in Spain, have performed the world’s first Milky Way simulation to accurately represent more than 100 billion individual stars, over a span of 10,000 years.

This feat was accomplished by combining artificial intelligence (AI) with numerical simulations. Not only does the simulation represent 100 times more individual stars than previous state-of-the-art models, but it was also produced more than 100 times faster. Presented at the international supercomputing conference SC ’25, the study represents a breakthrough at the intersection of astrophysics, high-performance computing, and AI. Beyond astrophysics, the new methodology can be used to model other phenomena, such as climate change and weather patterns.

Astrophysicists have been trying to create a simulation of the Milky Way Galaxy down to its individual stars, which could be used to test theories of galactic formation, structure, and stellar evolution against real observations. Building accurate models of galaxy evolution is difficult because they must account for gravity, fluid dynamics, supernova explosions, and element synthesis, each of which occurs on vastly different scales of space and time.

Until now, scientists have not been able to model large galaxies like the Milky Way while also resolving individual stars. Current state-of-the-art simulations have an upper mass limit of about one billion suns, while the Milky Way has more than 100 billion stars. As a result, the smallest “particle” in the model is really a cluster of stars with a combined mass of about 100 suns. What happens to individual stars is averaged out, and only large-scale events can be accurately simulated. The underlying problem is the length of time between steps in the simulation: fast changes at the level of individual stars, such as the evolution of supernovae, can only be captured if the interval between successive snapshots of the galaxy is short enough.

But processing smaller timesteps takes more time and more computational resources. Even setting aside the current mass limit, if the best conventional physical simulation to date tried to simulate the Milky Way down to the individual star, it would need 315 hours for every 1 million years of simulation time. At that rate, simulating just 1 billion years of galaxy evolution would take about 36 years of real time! Simply adding more supercomputer cores is not a viable solution either. Large numbers of cores consume enormous amounts of energy, and more cores do not necessarily speed up the calculation, because parallel efficiency drops as the core count grows.
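
The wall-clock figure follows from simple arithmetic: 1 billion years is 1,000 blocks of 1 million years, at 315 hours each. The sketch below reproduces that calculation, assuming an average year of 365.25 days. It also illustrates the diminishing returns from extra cores using Amdahl's law, a standard textbook scaling model swapped in here purely for illustration; the article does not say which scaling behavior the simulation codes follow, and the 99% parallel fraction is a hypothetical value.

```python
HOURS_PER_MYR = 315.0          # article's figure: wall-clock hours per 1 million simulated years
HOURS_PER_YEAR = 24 * 365.25   # assumption: average year length including leap years


def wall_clock_years(simulated_myr: float, hours_per_myr: float = HOURS_PER_MYR) -> float:
    """Real time (in years) needed to simulate `simulated_myr` million years."""
    return simulated_myr * hours_per_myr / HOURS_PER_YEAR


def amdahl_speedup(cores: int, parallel_fraction: float) -> float:
    """Amdahl's law: the serial part (1 - p) never gets faster, however many cores are added."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


def parallel_efficiency(cores: int, parallel_fraction: float) -> float:
    """Speedup per core; 1.0 would mean perfect scaling."""
    return amdahl_speedup(cores, parallel_fraction) / cores


if __name__ == "__main__":
    # 1 billion years = 1,000 million years
    print(f"{wall_clock_years(1000):.1f} years")  # 35.9 years

    P = 0.99  # hypothetical parallel fraction, for illustration only
    for n in (10, 100, 1000, 10000):
        print(n, round(amdahl_speedup(n, P), 1), round(parallel_efficiency(n, P), 3))
```

Under these assumptions the serial fraction caps the speedup at 1/(1 - p), here about 100 times, no matter how many cores are added, which is one way to see why more hardware alone stalls.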

In response to this challenge, Hirashima and his research team developed a new approach that combines a deep learning surrogate model with physical simulations. The surrogate model was trained on high-resolution simulations of a supernova and learned to predict how the surrounding gas expands in the 100,000 years after a supernova explosion, without drawing on resources from the rest of the model. This AI shortcut enabled the simulation to capture both the overall dynamics of the galaxy and fine-scale phenomena such as supernova explosions at the same time. To verify the simulation’s performance, the team compared the output with large-scale tests using RIKEN’s supercomputer Fugaku and The University of Tokyo’s Miyabi Supercomputer System.
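
The core idea of a surrogate model is to pay the cost of fine-grained simulation once, during training, and then replace it with a cheap prediction inside the main loop. The sketch below shows that general pattern on a toy problem; it is not the team's method. The expensive "fine" run is a hypothetical cooling law, dT/dt = -K T², integrated with thousands of tiny Euler steps, and a piecewise-linear lookup table stands in for the trained deep-learning network. The names `fine_simulation`, `TabulatedSurrogate`, `K`, and `HORIZON` are all illustrative.

```python
import bisect

K = 0.5         # hypothetical rate constant for the toy cooling law (arbitrary units)
HORIZON = 1.0   # toy stand-in for the fixed 100,000-year window the surrogate predicts


def fine_simulation(t0: float, dt: float = 1e-4) -> float:
    """Expensive reference run: integrate dT/dt = -K*T**2 over HORIZON with tiny Euler steps."""
    temp = t0
    for _ in range(int(round(HORIZON / dt))):
        temp -= K * temp * temp * dt
    return temp


class TabulatedSurrogate:
    """Cheap stand-in for a trained network: linear interpolation between precomputed fine runs."""

    def __init__(self, training_inputs):
        self.xs = sorted(training_inputs)
        # One-time "training" cost: run the expensive model at every sample point.
        self.ys = [fine_simulation(x) for x in self.xs]

    def predict(self, t0: float) -> float:
        # Find the bracketing pair of training points; outside the table,
        # the first or last segment is extrapolated linearly.
        i = bisect.bisect_left(self.xs, t0)
        i = min(max(i, 1), len(self.xs) - 1)
        x0, x1 = self.xs[i - 1], self.xs[i]
        y0, y1 = self.ys[i - 1], self.ys[i]
        w = (t0 - x0) / (x1 - x0)
        return y0 + w * (y1 - y0)


if __name__ == "__main__":
    surrogate = TabulatedSurrogate([1.0 + 0.25 * i for i in range(37)])  # samples from 1.0 to 10.0
    t0 = 3.1
    print("fine run :", fine_simulation(t0))    # 10,000 small steps
    print("surrogate:", surrogate.predict(t0))  # one table lookup
```

In a larger simulation loop, each event region would call `predict` once instead of forcing the whole system onto the tiny timestep that `fine_simulation` uses.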

IMAGE CREDIT: RIKEN.